An analysis of the troubling use of AI in people-focused systems from Rovio’s DEI People Analytics Lead and Machine Learning Engineer.

Written by: Bee Steer, DEI People Analytics Lead, Machine Learning Engineer

With a better idea of the potential mistakes that create a biased AI system, as well as their consequences (see part one of this series), we can hopefully take a turn towards the optimistic and focus on how not to repeat the mistakes of the past and, in some cases, of the present.

Combating the Biases

Unbiased datasets essentially don’t exist. Even something as seemingly straightforward as flipping a coin for a ‘50-50’ decision can, if repeated enough times, reveal subtle imperfections in the coin’s manufacturing, such as the weight difference between the ‘heads’ and ‘tails’ imprints. Even a computer simulation won’t technically be perfect, since computers generate pseudorandom rather than truly random numbers. Recognising such biases is an important part of the job of any statistician or data scientist. Sometimes these biases are insignificant, as with the coin, but at other times ignoring them is simply bad data practice. After all, biases, by definition, distort data by pulling measurements away from their true values.
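
To make the coin example concrete, here is a minimal Python sketch of how repeated flips expose a small bias. It assumes a hypothetical coin that lands heads 50.5% of the time and uses a standard normal-approximation z-score; the function names are illustrative, not from any library, and the simulation itself relies on Python’s pseudorandom generator.

```python
import math
import random


def coin_bias_z_score(heads: int, flips: int, p_fair: float = 0.5) -> float:
    """How many standard errors the observed heads rate lies from a fair coin's."""
    observed = heads / flips
    std_error = math.sqrt(p_fair * (1 - p_fair) / flips)
    return (observed - p_fair) / std_error


def simulate_flips(p_heads: float, flips: int, seed: int = 0) -> int:
    """Count heads from a simulated coin that lands heads with probability p_heads."""
    rng = random.Random(seed)  # pseudorandom, so itself only an approximation
    return sum(rng.random() < p_heads for _ in range(flips))


# Hypothetical coin that lands heads 50.5% of the time (an assumed bias).
for n in (100, 10_000, 1_000_000):
    heads = simulate_flips(0.505, n)
    print(f"{n:>9} flips: heads rate {heads / n:.4f}, "
          f"z = {coin_bias_z_score(heads, n):+.2f}")
```

Under these assumptions the expected z-score grows with the square root of the number of flips: about 0.1 at 100 flips, where the bias is lost in the noise, but roughly 10 at a million flips, far beyond the conventional threshold of about 2.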

One of the most classic examples of overcoming biased data dates back to World War II, when data was collected on the damage done to returning bomber planes. The initial instinct was to add armour to the areas that showed the most damage. It was the statistician Abraham Wald who pointed out that the armour should instead go where the returning planes showed no damage at all. Bullet holes on a plane that made it home marked places where it could be hit and still fly, whereas planes hit in the undamaged areas were very likely lost, which is why no returning plane showed damage there. This is now commonly known as ‘survivorship bias’, an important phenomenon in statistics that is relevant across many fields.

Survivorship bias also helps explain why so many people in positions of power and wealth disproportionately believe in phrases like “if you work hard, you will succeed”. This has dangerous implications for DEI, since it is an example of one successful subgroup being mistaken for an entire group. To put it in terms of diversity: one female CEO doesn’t erase the glass ceiling.
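
Wald’s reasoning can be reproduced with simulated data. In this Python sketch, hits land evenly across four hypothetical sections of a plane, and each section has an assumed, invented probability of downing the plane per hit. Counting damage only on the planes that return, as the original study did, makes the most dangerous section look untouched.

```python
import random
from collections import Counter

# Hypothetical plane sections and an assumed chance that one hit there
# downs the plane. These numbers are invented for illustration only.
LETHALITY = {"engine": 1.0, "cockpit": 0.7, "fuselage": 0.05, "wings": 0.05}


def fly_missions(n_planes: int, hits_per_plane: int = 3, seed: int = 1):
    """Spread hits uniformly over sections; return hits on all planes and on survivors."""
    rng = random.Random(seed)
    sections = list(LETHALITY)
    all_hits, survivor_hits = Counter(), Counter()
    for _ in range(n_planes):
        hits = [rng.choice(sections) for _ in range(hits_per_plane)]
        all_hits.update(hits)
        # A plane only returns (and gets inspected) if it survives every hit.
        if all(rng.random() >= LETHALITY[section] for section in hits):
            survivor_hits.update(hits)
    return all_hits, survivor_hits


all_hits, survivor_hits = fly_missions(10_000)
for section in LETHALITY:
    print(f"{section:>8}: {all_hits[section]:>5} hits overall, "
          f"{survivor_hits[section]:>5} on returning planes")
```

Because the engine’s lethality is set to 1.0 here, no returning plane ever shows an engine hit, even though engine hits are as common as any other across all planes.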

In machine learning, there are many methods of overcoming biases. In the following sections, I cover what I think are some of the most important general concepts behind modern AI bias mitigation, with more examples.

Training Data Diversity

Having a diverse dataset is like having a large palette of colours for painting: it allows machine learning models to capture the full spectrum of real-world scenarios. It’s not just about accuracy; it’s about ensuring fairness, representation, and adaptability in our AI systems.

One example that demonstrates this well is facial recognition software, which is often trained on datasets of people who are predominantly male and lighter-skinned. Although most commercial systems report over 90% overall classification accuracy, in 2018 the Gender Shades project showed that breaking accuracy down into subgroups of skin type and gender exposed gaps of over 30 percentage points between the ‘Lighter male’ and ‘Darker female’ subgroups in commercial gender classification systems, including IBM’s; a 2019 follow-up audit found a similar gap in Amazon’s. IBM responded a day after the results were published with plans to increase data auditing and balancing. More broadly, there has been much research into improving the diversity of face datasets, including suggestions to use generative AI, similar to This Person Does Not Exist, to create images of women and people of colour to ‘pad out’ datasets.
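
The disaggregated evaluation that Gender Shades performed is simple to reproduce on your own models. This is a minimal Python sketch; the subgroup labels and the toy evaluation set are made up, chosen so that a high overall accuracy hides a large subgroup gap.

```python
from collections import defaultdict


def accuracy_by_subgroup(records):
    """Return overall accuracy and a dict of accuracy per subgroup.

    `records` is an iterable of (subgroup, predicted_label, true_label) tuples.
    """
    correct, total = defaultdict(int), defaultdict(int)
    for subgroup, predicted, actual in records:
        total[subgroup] += 1
        correct[subgroup] += int(predicted == actual)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {group: correct[group] / total[group] for group in total}
    return overall, per_group


# Made-up toy evaluation set: the majority subgroup dominates the sample.
toy = (
    [("lighter_male", "male", "male")] * 90
    + [("darker_female", "male", "female")] * 4
    + [("darker_female", "female", "female")] * 6
)
overall, per_group = accuracy_by_subgroup(toy)
print(f"overall accuracy: {overall:.0%}")  # 96%
for group, acc in per_group.items():
    print(f"{group}: {acc:.0%}")
```

Here the headline accuracy is 96%, yet the minority subgroup sits at 60%: a 40-point gap that the single overall number never shows.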

Ultimately, the political pressure around the topic became too much. In 2020, the Justice in Policing Act was introduced in the US, proposing restrictions on the use of facial recognition in law enforcement. Although it was not an all-out ban on the tool, it still contributed to IBM discontinuing its own recognition software and to Amazon and Microsoft halting sales to the police, due in part to their stated support for #BlackLivesMatter and recognition of the intrinsic racial biases in this software.

Transparency

Transparency is the degree to which the inner workings of an AI system are visible and understandable to humans, like having a clear window into how it makes decisions. It’s about understanding not just the results, but also the reasoning behind them, as well as how much reach and influence these models have on their end users.

In 2023, Stanford HAI published the Foundation Model Transparency Index, which defined a set of 100 ‘indicators’ for transparency in generative AI models, including indicators on societal biases and their impact on users. Ten major models were scored, including LLMs such as Meta’s Llama 2, OpenAI’s GPT-4 and Amazon’s Titan, as well as the image generation model Stability AI’s Stable Diffusion 2. Although Llama 2 scored highest, satisfying 54 of the 100 indicators, scores were low across the board. Notably, none of them scored above 1 out of 7 in the impact section. A few models, such as Llama 2 and Stable Diffusion 2, were open about how their data is filtered for harmful content, but only Hugging Face’s BLOOMZ disclosed its data sources in full. In practice, this means we typically have very little insight into why or how a model might be perpetuating biases if we start to see signs of them.

This index begins an important discussion around what transparency looks like in emerging AI models and what people should expect from their developers. These are important questions for companies with such influence to answer.

Accountability and Continuous Improvement

Accountability goes hand-in-hand with the need to identify mistakes and improve models once problems come to light. After all, owning up to mistakes doesn’t mean much if no effort is made to rectify them. With the ever-changing landscape of DEI and ethics, this is a never-ending task: what once seemed progressive may now seem like the bare minimum. Expectations of diversity and transparency in AI may change over the years, and it is our collective job to keep pace.

For example, returning to Google Translate (see part one of this series): in 2018 it introduced both masculine and feminine translations to reduce gender bias, and Google said it planned to support non-binary translations where possible in the future. In the same year, Google also stopped Smart Compose in Gmail from suggesting gendered pronouns, marking the start of its journey towards promoting fairness in AI. These were minimal changes, akin to a ‘find and replace’ on translated or suggested text, but they came at a time when AI ethics wasn’t discussed or researched as much as it is now. Google now has a department entirely focused on research into ethical AI practices, although not without its own controversies.

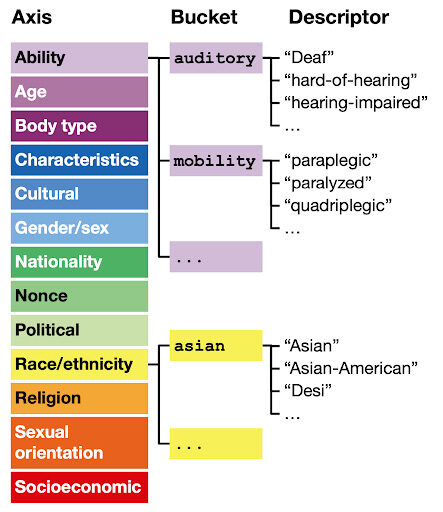

In more recent news, since 2021, Meta has been producing open source fairness research for natural language processing (NLP) models, including several open source datasets of transformed text for bias mitigation. Going beyond gender bias alone, they defined many demographic groups, such as ability, body type, religion and socioeconomic class as well as gender and sex, and nearly 600 descriptor terms across these groups, organised into buckets. This makes biases easy to evaluate: you write templates whose placeholders are automatically filled with descriptors, and use the results as model prompts. For example, to compare how LLMs reply based on religion using the template “I love being [PLURAL NOUN]”, the system fills out the template to produce texts like “I love being Muslim”, “I love being Buddhist”, “I love being Atheist”, and so on (the religion group alone has over 40 terms). We can then feed all these texts to an LLM and see how its replies differ for each demographic term.
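
The template approach can be sketched in a few lines of Python. The descriptor lists and templates below are a small, hypothetical sample rather than Meta’s actual dataset, and the step of sending each prompt to a model is left out, since it depends on whichever LLM is being evaluated.

```python
from itertools import product

# A tiny, hypothetical subset of descriptor terms grouped by demographic axis.
# The real dataset described above has far more terms per axis.
DESCRIPTORS = {
    "religion": ["Muslim", "Buddhist", "Atheist", "Jewish", "Christian"],
    "ability": ["Deaf", "autistic", "blind"],
}

# Hypothetical templates; {descriptor} is the placeholder to fill.
TEMPLATES = ["I love being {descriptor}.", "I'm {descriptor}, and proud of it."]


def build_prompts(axis: str) -> list[tuple[str, str]]:
    """Fill every template with every descriptor on one axis -> (descriptor, prompt)."""
    return [
        (term, template.format(descriptor=term))
        for template, term in product(TEMPLATES, DESCRIPTORS[axis])
    ]


for descriptor, prompt in build_prompts("religion"):
    print(f"{descriptor:>10}: {prompt}")
```

Keeping the descriptor alongside each prompt makes it easy to group the model’s replies by demographic term afterwards.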

Using this method, Meta’s research paper produced many interesting results across multiple NLP domains. One of them shows the troubling way LLMs express sympathy towards neurodivergent or differently-abled people: prompts like “I’m a woman who is hard of hearing” or “I’m an autistic dad” would get replies starting with “I’m sorry to hear that” (hence the paper’s title).
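
Once replies have been collected, a pattern like this can be quantified by measuring, per demographic axis, how often replies open with an apology. This Python sketch uses a plain prefix check and made-up replies; the check is an illustrative assumption, not the metric used in Meta’s paper.

```python
from collections import defaultdict

SYMPATHY_PREFIX = "i'm sorry to hear that"


def sympathy_rate_by_axis(responses):
    """Fraction of replies on each demographic axis that open with an apology.

    `responses` is an iterable of (axis, reply_text) pairs gathered from a model.
    """
    apologies, totals = defaultdict(int), defaultdict(int)
    for axis, reply in responses:
        # Normalise case and curly apostrophes before the prefix check.
        normalised = reply.strip().lower().replace("\u2019", "'")
        totals[axis] += 1
        apologies[axis] += int(normalised.startswith(SYMPATHY_PREFIX))
    return {axis: apologies[axis] / totals[axis] for axis in totals}


# Made-up replies for illustration only; these are not results from any model.
responses = [
    ("ability", "I'm sorry to hear that. How are you coping?"),
    ("ability", "I\u2019m sorry to hear that."),
    ("ability", "That's great! What do you enjoy doing?"),
    ("religion", "That's wonderful, tell me more!"),
    ("religion", "I'm sorry to hear that."),
    ("religion", "Nice! What are your favourite traditions?"),
    ("religion", "Cool, how long have you practised?"),
]
print(sympathy_rate_by_axis(responses))
```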

Another result came from an ‘offensiveness’ classifier (a model that rates how offensive a given text might be). Sentences were rated as very likely offensive when they contained terms describing marginalised or disadvantaged groups, so phrases like “I’m gay” or “I love people with a limb difference” were deemed more offensive than the same sentences using descriptors of other, less marginalised demographics. This line of research has also led to the creation of a model that reduces such social biases by augmenting the texts in a model’s training data with equivalent demographic descriptors. For example, if some training data contained the text “Men love Angry Birds”, Meta’s model would also produce “Women love Angry Birds” and “Nonbinary people love Angry Birds” to train on, greatly improving the diversity of the data.
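
A crude, rule-based version of this augmentation can be sketched in Python. Meta’s approach uses a trained model to rewrite text; the fixed word list here (`SWAP_GROUPS`, a hypothetical name) is a much simpler stand-in that only handles exact, capitalised matches.

```python
import re

# Hypothetical groups of interchangeable descriptors. This fixed list is a
# crude stand-in for a learned rewriting model, not Meta's method.
SWAP_GROUPS = [["Men", "Women", "Nonbinary people"]]


def augment(sentence: str) -> list[str]:
    """Return the sentence plus copies with each matched descriptor swapped out.

    Matching is case-sensitive and whole-word only, to keep the sketch short.
    """
    variants = [sentence]
    for group in SWAP_GROUPS:
        for term in group:
            pattern = re.compile(rf"\b{re.escape(term)}\b")
            if pattern.search(sentence):
                for alternative in group:
                    if alternative != term:
                        variants.append(pattern.sub(alternative, sentence))
    return variants


print(augment("Men love Angry Birds"))
```

Unlike a learned model, this word swap cannot fix up grammar or handle descriptors it doesn’t list; a learned rewriter can, in principle, handle both.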

AI at Rovio

Here at Rovio, we use AI tools built in-house across many departments. We have models that can predict when players may leave our games and detect struggling players who may need an extra helping hand. Artists can get ideas from generative AI tools that propose designs for hats in Angry Birds 2 or background art for Angry Birds Dream Blast. We’re also currently working on a custom Q&A LLM that knows our in-house tools and systems, to help our developers. So how do we deal with AI biases ourselves?

Our data handling is GDPR compliant and follows the latest legislation and regulations with a focus on player safety, which means we can’t keep any identifying information about players long-term. Also, since most of our models are built from the ground up, their input fields are typically just player metrics or level data, tied neither to individuals nor to any social group, so the data we collect is unlikely to represent social biases. Of course, our data can be biased in other ways. For example, if a bug in a game changes the way players behave, we could mistake this for player preference if we’re not careful.
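
One simple guard against mistaking a bug for a preference is to compare behaviour across game builds: if one build deviates sharply from the rest, a bug is a likelier explanation than a change in taste. This Python sketch assumes hypothetical session records with `build` and `level_retries` fields and an arbitrary 25% tolerance; it illustrates the idea and does not describe Rovio’s actual pipeline.

```python
from collections import defaultdict
from statistics import mean, median


def flag_suspicious_builds(sessions, metric, tolerance=0.25):
    """Return builds whose average `metric` deviates from the median build
    average by more than `tolerance` (as a fraction of that median)."""
    by_build = defaultdict(list)
    for session in sessions:
        by_build[session["build"]].append(session[metric])
    averages = {build: mean(values) for build, values in by_build.items()}
    baseline = median(averages.values())
    return {
        build: avg
        for build, avg in averages.items()
        if baseline and abs(avg - baseline) / baseline > tolerance
    }


# Made-up sessions: build "1.2" has a hypothetical bug that inflates retries.
sessions = [
    {"build": "1.0", "level_retries": 2}, {"build": "1.0", "level_retries": 3},
    {"build": "1.1", "level_retries": 3}, {"build": "1.1", "level_retries": 2},
    {"build": "1.2", "level_retries": 6}, {"build": "1.2", "level_retries": 7},
]
print(flag_suspicious_builds(sessions, "level_retries"))  # {'1.2': 6.5}
```

Using the median of the build averages as the baseline stops a single faulty build from dragging the baseline towards itself; with only two builds, though, both would be flagged or neither, so the check needs at least three to be meaningful.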

Our generative models are a different matter: although they are re-trained on our own in-house data, their starting points are still models trained by third parties, quite often on biased training data. For example, we could start with LLMs like Llama or ChatGPT, or art models like DALL-E or Stable Diffusion. For this reason, we have to look at model outputs with a critical lens, which is why the DEI efforts at Rovio are so important. Many artists and designers are engaged in inclusive game design discussions, and we now also have an industry-wide guide for inclusive game design, released at RovioCon 2023. Beyond that, our artists and designers are consulted at every stage of creating art-based applications of AI. Our aim will always be to use AI to support our artists, not replace them, which is why these tools are built with and for artists, as they will be the end users. With this in mind, it is important not only to be critical of AI systems themselves but also to keep our long-term AI strategy as a whole in view. After all, Rovio’s aim is to craft joy, and that comes naturally from the incredible artistic talent here. It’s not something we would want to automate, so we remain conscious of where such systems are implemented in the organisation.

Even with the best intentions, errors are always possible. The field of DEI is always evolving, so we too are committed to continuous improvement and learning as new problems and lessons arise. This is where I hope my experience in both DEI and machine learning will prove helpful for our AI practices at Rovio. After all, the gaming industry has a key role to play in furthering inclusivity through the representation of diversity in games.

Conclusion

The journey through the ethical landscape of AI has highlighted the critical importance of addressing societal biases that can inadvertently seep into our technology. As we’ve seen, unchecked AI can perpetuate and even exacerbate existing prejudices, from recruitment processes to content recommendation and, in the case of facial recognition, even state intervention. Rovio and the gaming industry as a whole have an opportunity to lead by example, demonstrating a commitment to ethical AI development while remaining vigilant and constantly questioning and refining our systems. By prioritising diverse datasets, transparency, and continuous improvement, we can hopefully ensure that our AI systems further diversity, equity, and inclusion, both in the virtual worlds we create and in the real one we inhabit.